Digital Fingerprinting & Profiling

The Invisible Empire of Propaganda

The Panopticon Has a New Face

You’re being watched and analyzed, from the inside out, by an entity that will soon be so powerful it won’t need to control what you do. It will simply predict it, shape it, and make you believe it was your idea all along.

You’re being watched when you browse in private mode. Not just when you “accept all cookies,” but even when you decline. Watched when you use a VPN. Watched before you click. Watched after you log off. And the watcher doesn’t need your name, your email, or your face. It only needs your digital fingerprint: the invisible pattern your device, your browser, and, first and foremost, your behavior leave behind like a scent trail.

Every time you scroll, swipe, or tap, platforms like Google, Meta, and X (formerly Twitter) collect fragments of your identity: the model of your phone, your device IDs, the fonts installed on your computer, your screen resolution, mouse movement, time zone, battery level, typing cadence. Hundreds of micro-signals. Stitched together, they form a fingerprint more persistent than your username. It follows you across sessions, across devices, across apps, and across the internet.
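To make the “stitching” concrete, here is a minimal Python sketch, not any platform’s actual code. It assumes a handful of hypothetical signal names and values, serializes them in a canonical order, and hashes the result with SHA-256. The same device yields the same identifier on every visit, with no cookie or login involved.

```python
import hashlib
import json

def fingerprint_id(signals: dict) -> str:
    """Combine device and browser signals into one stable identifier.

    The signal names used here are hypothetical examples, not any
    tracker's real schema.
    """
    # sort_keys makes the serialization independent of the order in which
    # the signals were collected; list values keep their own order.
    canonical = json.dumps(signals, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical signals observed on one visit.
visit_monday = {
    "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "screen": "2560x1440",
    "timezone": "Europe/Amsterdam",
    "fonts": ["Arial", "Calibri", "Garamond"],
    "language": "nl-NL",
}

# The same device the next day; the signals arrive in a different order.
visit_tuesday = dict(reversed(list(visit_monday.items())))

# The same device after traveling: only the time zone differs.
visit_abroad = {**visit_monday, "timezone": "America/New_York"}

print(fingerprint_id(visit_monday) == fingerprint_id(visit_tuesday))  # True
print(fingerprint_id(visit_monday) == fingerprint_id(visit_abroad))   # False
```

The exact-match design is also its weakness: change one value, as in the last comparison, and the identifier changes completely. That brittleness is what signal-randomizing tools exploit.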

Originally sold as an advertising tool, fingerprinting has quietly evolved into something more sinister: a behavioral tagging system used to shape what you see, hide what you’re not supposed to, and steer your opinions before you even know you’ve formed one.

This isn’t just corporate overreach. It’s a new infrastructure of soft censorship and algorithmic propaganda — outsourced to Big Tech but increasingly aligned with government narratives, war efforts, and state power.

You don’t need to be arrested to be silenced anymore. You just need to be profiled, scored, and buried in the algorithm.

Welcome to the new panopticon. You didn’t build it. But it knows how to build you.

What Is Digital Fingerprinting — and Why It’s More Dangerous Than Cookies

Cookies are crude. Digital fingerprinting is elegant — and far more invasive.

For years, internet users were told to fear "cookies": small files websites leave on your device to remember who you are. Clear your cookies, use incognito mode, and you could disappear from the grid — or so the myth went. But fingerprinting doesn’t care about cookies. It doesn’t even need your permission.

Digital fingerprinting is the practice of collecting dozens, even hundreds, of subtle signals from your device and from you to identify you uniquely. Your browser version, installed plugins, operating system, screen size, keyboard language, even the way your device renders invisible pixels — all of it becomes data. On its own, none of it is revealing. Combined, it's as unique as a thumbprint.
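Why does a pile of unremarkable details add up to a thumbprint? A common way to reason about it is in bits of identifying information: a value shared by a fraction p of users reveals -log2(p) bits, and about 33 bits are enough to tell apart everyone on Earth. The Python sketch below assumes made-up frequencies and, unrealistically, that the attributes are independent.

```python
import math

# Hypothetical shares of users who have the same value as one visitor for
# each attribute. These numbers are illustrative, not measured data.
attribute_shares = {
    "browser_version": 1 / 20,
    "screen_resolution": 1 / 30,
    "timezone": 1 / 50,
    "installed_fonts": 1 / 2000,
    "canvas_render": 1 / 500,
}

def surprisal_bits(share: float) -> float:
    """Bits of identifying information revealed by a value held by `share` of users."""
    return -math.log2(share)

# Assuming the attributes are independent, their bits simply add up.
total_bits = sum(surprisal_bits(p) for p in attribute_shares.values())

# Bits needed to single out one person among roughly 8 billion.
bits_needed = math.log2(8_000_000_000)

print(f"combined: {total_bits:.1f} bits, needed: {bits_needed:.1f} bits")
# combined: 34.8 bits, needed: 32.9 bits
```

No single attribute here narrows the field below one in a few thousand, yet together they clear the threshold. Real attributes are correlated, so the true total is lower, which is why trackers collect dozens of signals rather than five.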

Here’s the twist: you don’t need to log in. You don’t need to accept anything. You don’t need to be followed from app to app or site to site, because your fingerprint is already there waiting for you. Every time it is detected, the system knows it’s you. No name required, and no matter where you go on the internet.
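The difference from cookies fits in a few lines. In this hypothetical Python sketch, a tracker logs every visit twice: once under a cookie ID stored in the browser, and once under a fingerprint computed from device signals. Clearing cookies severs the first trail but not the second. The signal values, page names, and the `Tracker` class are invented for illustration.

```python
import hashlib
import secrets

def fingerprint_id(signals: tuple) -> str:
    """Hash device signals into an ID; nothing is stored on the user's device."""
    return hashlib.sha256("|".join(signals).encode("utf-8")).hexdigest()

class Tracker:
    """Hypothetical tracker that logs each visit under two keys."""

    def __init__(self) -> None:
        self.by_cookie = {}       # cookie ID -> pages visited
        self.by_fingerprint = {}  # fingerprint ID -> pages visited

    def record(self, cookie_id: str, signals: tuple, page: str) -> None:
        self.by_cookie.setdefault(cookie_id, []).append(page)
        self.by_fingerprint.setdefault(fingerprint_id(signals), []).append(page)

# Hypothetical signals from one laptop; clearing cookies does not change them.
laptop = ("Firefox 128", "1920x1080", "America/Chicago", "en-US")
tracker = Tracker()

cookie = secrets.token_hex(8)   # cookie set on the first visit
tracker.record(cookie, laptop, "news/article-1")

cookie = secrets.token_hex(8)   # cookies cleared: the site issues a fresh one
tracker.record(cookie, laptop, "forum/thread-42")

print(tracker.by_cookie[cookie])                       # ['forum/thread-42']
print(tracker.by_fingerprint[fingerprint_id(laptop)])  # ['news/article-1', 'forum/thread-42']
```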

Big Tech loves this. Google pitched its “Privacy Sandbox,” an Orwellian name, as the replacement for third-party cookies, yet it moves interest profiling into your own browser, where it is harder to see, and in 2025 Google began allowing advertisers on its platforms to use fingerprinting outright. Meta tracks users across the open web through its Pixel and related tools, even when they’re not on Facebook or Instagram. Twitter/X routes links through its own t.co redirector and measures dwell time: how long you hovered, hesitated, or scrolled. They don’t just know what you clicked. They know how you felt before clicking.

This data feeds the core machinery of the modern attention economy. But advertising was only the beginning. Once platforms could identify you with near-perfect precision, they could begin curating reality around your profile.

That’s where fingerprinting becomes dangerous: not just as surveillance, but as a lever of control. If a platform knows who you are — what makes you tick, rage, or despair — it knows exactly what information to amplify and what to suppress to nudge you in a certain direction. You’re not just being tracked; you’re being shaped.

In a world where political influence, public opinion, and even military narratives are mediated by algorithms, fingerprinting has become the invisible backbone of algorithmic propaganda. It is the silent censor, the invisible manipulator, and the key to a future where truth is personalized, weaponized, and sold to the highest bidder.

From Advertising to Psychological Warfare

Digital fingerprinting was born in the ad industry, but it now serves a far more dangerous master: the war for your mind.

Once platforms could recognize you without your name, they began building something far more powerful — behavioral dossiers. These are not just records of what you click. They are real-time models of your attention span, emotional triggers, political leanings, and psychological vulnerabilities. And they don’t sit idle. They’re used — every second — to engineer what you see, what you believe, and what you never even realize you missed.

The line between commerce and control collapsed when behavior prediction evolved into behavior manipulation. In ad tech, it’s called “optimization.” In politics, it’s called “psychological warfare.”

Think back to Cambridge Analytica, the scandal that should have changed everything but didn’t. That firm didn’t just steal data on as many as 87 million Facebook users. It used personality models to target them with emotional precision. Fearful conservatives were shown violent imagery. Disaffected liberals were fed despair. Every user received a customized propaganda cocktail, designed to polarize, demoralize, or radicalize, depending on what worked.

Today, that model is not an exception. It’s standard practice, only now it’s more powerful, more granular, and, in most of the world, perfectly legal. Thanks to fingerprinting, platforms can tailor content to *you* without ever asking who you are.

If your fingerprint suggests you’re prone to anxiety, you’ll see content that spikes it. If your behavioral profile shows you respond to moral outrage, your feed will drip with righteous fury. If your scroll pattern implies political disengagement, you may never see dissenting views at all. The algorithm doesn’t care what you believe — only what keeps you hooked, divided, or silent.

This is not a conspiracy. It’s a business model. And in an era of war, unrest, and mass disinformation, it’s also a weapon.

During conflicts — from Ukraine to Gaza — platform feeds are adjusted in ways that mirror state narratives. Content is promoted or buried not by humans but by algorithms trained on fingerprints. You might never see war crimes, protests, or even the existence of dissent — not because it’s censored manually, but because you’ve been flagged as someone who shouldn’t see it. Not “shadowbanned” in the old sense, but algorithmically redirected.

This is the future of censorship: not through deletion, but through manipulative silence. No jackboots. No police. Just a feed that quietly adapts to keep you numb, angry, compliant — or confused.

Fingerprinting made you legible. Profiling made you predictable. Now, propaganda makes you programmable.

Soft Censorship, Narrative Control, and Platform Alignment

Censorship today doesn’t look like a government blacklist or a midnight raid on a printing press. It looks like nothing at all. You post a video — no one sees it. You share a link — engagement flatlines. You shout into the void — and the void swipes past. This is soft censorship, and fingerprinting is its scalpel.

Big Tech platforms claim neutrality, but their systems of visibility are anything but neutral. What shows up in your feed — and what doesn’t — is filtered through algorithms trained on behavioral profiles. Fingerprints give platforms the power to pre-sort truth, customizing reality for each user based on predictive scores.

This creates what amounts to informational redlining. Not every voice is silenced. Just the wrong ones. Not every post is deleted. Just the inconvenient ones — buried under noise, throttled into irrelevance, or quietly denied reach. The user never knows. The target never sees the trigger.

Consider the phrase often repeated by platform execs: “Freedom of speech is not freedom of reach.” It sounds reasonable — until you realize that “reach” is being allocated by opaque algorithms that sort users not by content quality, but by psychometric tags extracted through fingerprinting.

Here’s how it works:

A user shows signs of being “emotionally volatile” — content is downranked to avoid “harm.”

A post contains footage from a war zone — flagged for “contextual review,” suppressed while “under evaluation.”

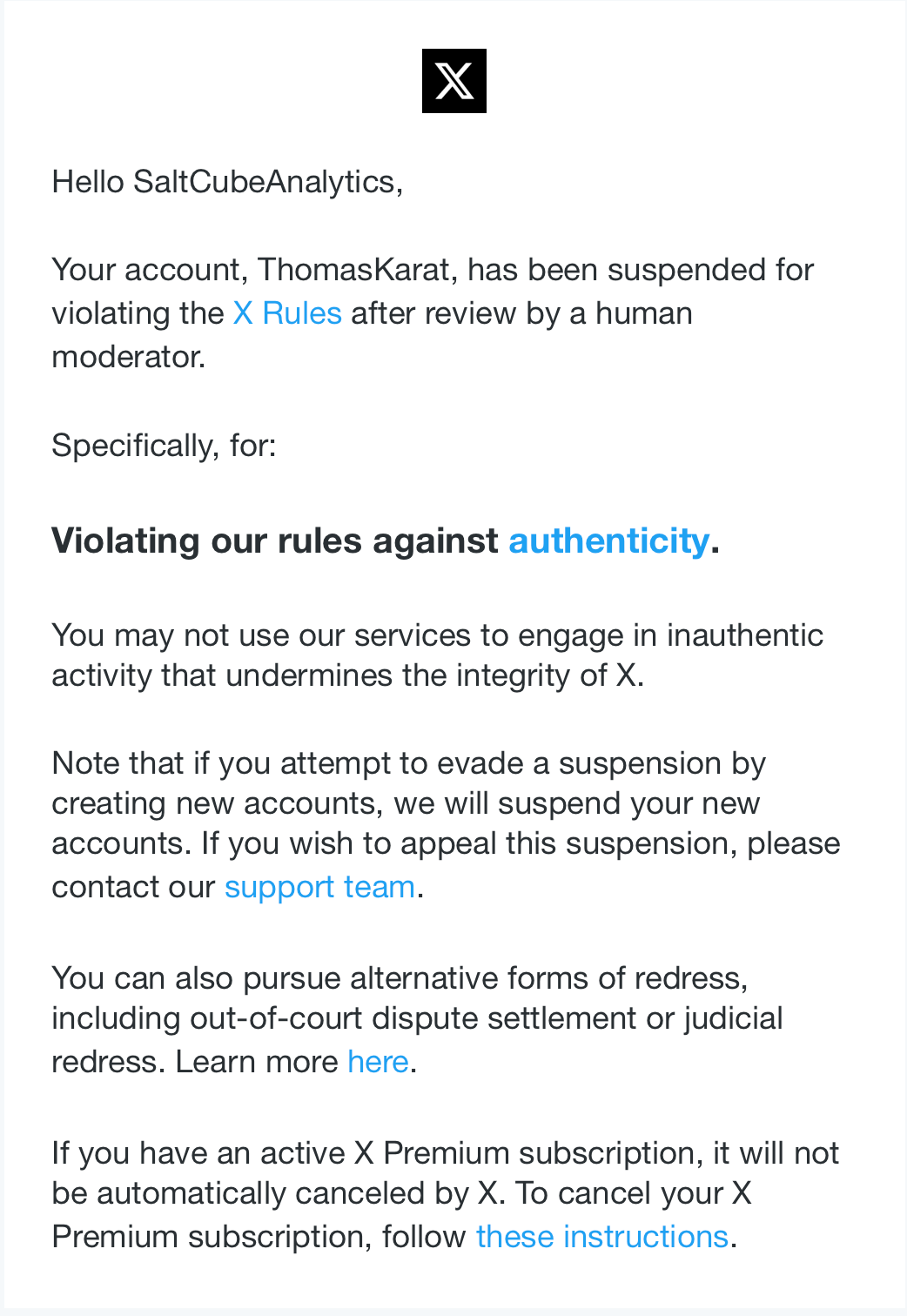

A profile consistently questions mainstream narratives: content throttled as suspected “coordinated inauthentic behavior,” as happened to me recently.
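None of these platforms publishes its ranking code, so the following is a hypothetical Python sketch, not a description of any real system. It shows the general mechanism the three examples above describe: flags attach reach multipliers to posts, the feed ranks by the reduced score, and flagged material never gets deleted. It simply fails to make the cut. The flag names, multipliers, and scores are invented.

```python
from dataclasses import dataclass, field

# Hypothetical flags and reach multipliers, loosely modeled on the three
# scenarios above. No platform has published rules like these.
REACH_MULTIPLIERS = {
    "author_flagged_volatile": 0.5,
    "war_footage_under_review": 0.1,
    "suspected_coordinated_behavior": 0.3,
}

@dataclass
class Post:
    text: str
    base_score: float                  # e.g. predicted engagement before moderation
    flags: set = field(default_factory=set)

def visible_score(post: Post) -> float:
    """Apply every flag's multiplier: the post is not removed, only shrunk."""
    score = post.base_score
    for flag in post.flags:
        score *= REACH_MULTIPLIERS.get(flag, 1.0)
    return score

def build_feed(posts: list, limit: int) -> list:
    """Rank posts by moderated score and keep only the top `limit`."""
    ranked = sorted(posts, key=visible_score, reverse=True)
    return [p.text for p in ranked[:limit]]

posts = [
    Post("Celebrity gossip", 40.0),
    Post("Footage from a war zone", 90.0, {"war_footage_under_review"}),
    Post("Critique of official narrative", 70.0, {"suspected_coordinated_behavior"}),
    Post("Sports highlights", 30.0),
]
print(build_feed(posts, limit=2))  # ['Celebrity gossip', 'Sports highlights']
```

The two posts with the highest raw engagement end up at 9 and 21 points and drop out of a two-item feed, while their authors see no takedown notice.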

So these are not conspiracy theories. They’re policy mechanisms, built atop fingerprint-driven AI models that learn how to invisibly moderate at scale.

Now layer in geopolitics.

During the Ukraine war, anti-NATO posts saw throttling on Facebook and Twitter. In the Israel-Palestine conflict, users reported mass takedowns of Palestinian voices and invisibility of civilian casualty content. These weren’t isolated glitches. They were patterned suppressions — behaviorally calibrated and algorithmically enforced.

Behind the scenes, platforms increasingly coordinate with governments. If you watched the exposé included above, you know how close this cooperation has been from the very beginning.

In the U.S., the Department of Homeland Security, through its cybersecurity agency CISA, flagged posts to Facebook and Twitter as “misinformation,” a word that now encompasses everything from foreign propaganda to inconvenient facts. And because fingerprinting allows platforms to quietly label “high-risk” users, dissent is no longer a problem of speech. It’s a problem of visibility management.

This is how propaganda looks in the digital age: not loud, but selective. Not imposed, but inferred. Not by blocking speech, but by shaping the conditions in which speech fails to matter.

Your fingerprint doesn’t just identify you. It classifies you. And once classified, your truth becomes optional.

Toward a Regime of Inference and Obedience

The endgame of fingerprinting isn’t surveillance — it’s inference. And inference is power.

When platforms collect enough signals — not just who you are, but how you behave, hesitate, scroll, and react — they stop needing your input. They can predict your views, moods, and responses before you express them. They can infer your politics without a single tweet. They can assess your “trustworthiness,” “extremism risk,” or “vaccine hesitancy” without any explicit statement. And once you’re flagged, your digital environment is adjusted accordingly — not just what you see, but how you are seen.
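How can politics be inferred “without a single tweet”? One textbook approach is nearest-centroid classification: compare a user’s passive behavior with the average behavior of users who have already been labeled. The Python sketch below assumes invented topics, labels, and dwell times. Real systems, if they work this way at all, use far more signals and far larger models.

```python
import math

# Hypothetical reference profiles: average seconds of dwell time per topic
# for users previously labeled with each leaning. Invented numbers.
CENTROIDS = {
    "leaning_a": {"border_security": 40.0, "climate": 5.0, "gun_rights": 30.0},
    "leaning_b": {"border_security": 8.0, "climate": 45.0, "gun_rights": 4.0},
}

def distance(u: dict, v: dict) -> float:
    """Euclidean distance over the union of topics (a missing topic counts as 0)."""
    topics = set(u) | set(v)
    return math.sqrt(sum((u.get(t, 0.0) - v.get(t, 0.0)) ** 2 for t in topics))

def infer_label(dwell: dict) -> str:
    """Assign the label whose reference profile is closest to this user's behavior."""
    return min(CENTROIDS, key=lambda label: distance(dwell, CENTROIDS[label]))

# A user who never posted or liked anything: only reading times were observed.
lurker = {"border_security": 35.0, "climate": 10.0, "gun_rights": 22.0}
print(infer_label(lurker))  # leaning_a
```

The lurker never expressed an opinion; the label comes entirely from how long they lingered on each topic.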

This is a new form of governance: not based on law, but on behavioral preemption.

You don’t need to commit a crime. Your likelihood of dissent is enough to get you soft-flagged. Your curiosity about controversial topics becomes a liability. Your passive resistance — lurking without liking, reading without reacting — marks you as suspicious.

This logic mirrors China’s Social Credit System, but here it’s decentralized, invisible, and privatized. You’re not denied a loan or train ticket — you’re denied legibility. Denied amplification. Denied relevance. You become anomalous, which in algorithmic systems is the same as becoming wrong.

This is how obedience is manufactured in the digital age: not through fear, but through design. Not through force, but through friction. Not through punishment, but through invisibility.

The future is not Orwellian. It is frictionless and inferential — a world where control no longer needs to say "no." It simply nudges you until you stop asking.

Conclusion – The Resistance Is Behavioral

You were never asked for consent. You were never warned. Yet every click, hesitation, and scroll fed the machine. Digital fingerprinting has turned your devices into sensors — not just of movement, but of mind. And those signals now power the quiet machinery of control: personalized propaganda, soft censorship, behavioral scoring, and reality engineering.

This is not just a privacy crisis. It’s an epistemic war — over what you know, what you’re allowed to see, and who gets to shape the world you live in.

Fighting back doesn’t mean logging off. It means becoming ungovernable in the language of the machine. Break the fingerprint. Obfuscate. Use tools that randomize or spoof your signals. Support platforms that reject surveillance economics. Push for legislation that bans fingerprinting by default, not just cookie banners that lie.
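Randomizing a signal works because fingerprinting relies on exact matches: if one input changes every session, the identifier built from it changes too. The Python sketch below assumes a hypothetical canvas value as the randomized signal and leaves the other signals untouched.

```python
import hashlib
import random

def fingerprint_id(signals: dict) -> str:
    """Hash signals, in sorted key order, into one identifier."""
    canonical = "|".join(f"{k}={signals[k]}" for k in sorted(signals))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

REAL_SIGNALS = {
    "screen": "2560x1440",
    "timezone": "Europe/Amsterdam",
    "canvas_hash": "a91f03",  # hypothetical summary of how the GPU draws a test image
}

def randomized_signals(rng: random.Random) -> dict:
    """Return the real signals with the canvas value replaced by per-session noise.

    This mimics the general idea of randomizing a fingerprintable value; it is
    not how any particular browser implements it.
    """
    noisy = dict(REAL_SIGNALS)
    noisy["canvas_hash"] = f"{rng.getrandbits(64):016x}"
    return noisy

rng = random.Random()
static_ids = {fingerprint_id(REAL_SIGNALS) for _ in range(5)}
random_ids = {fingerprint_id(randomized_signals(rng)) for _ in range(5)}

print(len(static_ids))  # 1: every session links to the same identity
print(len(random_ids))  # 5: sessions no longer share an identifier
```

Randomization is one strategy (Brave calls its version “farbling”); the other is uniformity, where Tor Browser tries to make every user report the same values. Randomization has a catch that bears on staying unpredictable: only the randomized signals stop linking sessions, and the stable ones left over, here screen size and time zone, still narrow you down.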

And above all: stay unpredictable. Behavior is the battleground. If they can’t profile you, they can’t control you.

Big Tech wants your data. Governments want your obedience. What they both fear is your noncompliance — not as noise, but as clarity.

Resist not just what you see — but how you’re seen.

Excellent

I like your suggestion: "Break the fingerprint. Obfuscate. Use tools that randomize or spoof your signals. Support platforms that reject surveillance economics. Push for legislation that bans fingerprinting by default, not just cookie banners that lie.

And above all: stay unpredictable."

The question is, how do you do that? I frequent left- and right-wing sites, and I visit both Palestinian and Zionist sites. Does that make me unpredictable, or just different but still categorized?